Technical SEO Checklist: 10 Essential Tips to Get Better Ranking

- Posted by: Poorti Gupta on 06 Nov, 2017

Technical SEO deals with how robots crawl and index your website. Apart from on-page and off-page techniques, you must give equal importance to technical SEO in order to get better crawlability, indexability, and ranking.


First, check how many pages of your website are indexed in search results. You can do this by entering the search query “site:domain.com” in Google.

Observe the results. The number should be close to the total number of pages on your site minus the pages you have restricted from indexing. If the difference is larger than you expected, review the blocked pages.

It is vital to check that all your important pages are crawlable and not blocked. Look at robots.txt, noindex meta tags, the X-Robots-Tag header, and orphan pages to find blocked resources.

Remember that Google can now crawl all kinds of resources. If your CSS files are restricted from crawling, Google won’t see pages the way they look to users. Similarly, Google won’t index dynamically generated content if your JS is disallowed from crawling.
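To see which resources a robots.txt file blocks, a short script can help. The sketch below uses Python's standard `urllib.robotparser`; the robots.txt content and the URLs are made-up examples, and Python's matching rules are simpler than Google's, so treat the result as a first pass rather than a final verdict.

```python
from urllib.robotparser import RobotFileParser


def find_blocked(robots_txt: str, urls: list[str], user_agent: str = "Googlebot") -> list[str]:
    """Return the URLs that the given robots.txt text disallows for user_agent."""
    parser = RobotFileParser()
    # parse() works on the file's lines, so no network request is made here.
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Hypothetical robots.txt that blocks stylesheets and scripts.
SAMPLE_ROBOTS = """\
User-agent: *
Disallow: /assets/css/
Disallow: /assets/js/
"""

# Hypothetical URLs to audit.
SAMPLE_URLS = [
    "https://www.example.com/blog/technical-seo",
    "https://www.example.com/assets/css/site.css",
    "https://www.example.com/assets/js/app.js",
]

for blocked in find_blocked(SAMPLE_ROBOTS, SAMPLE_URLS):
    print("blocked:", blocked)
```

With this sample, the CSS and JS files are reported as blocked while the blog post is not.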

Things to look at while auditing

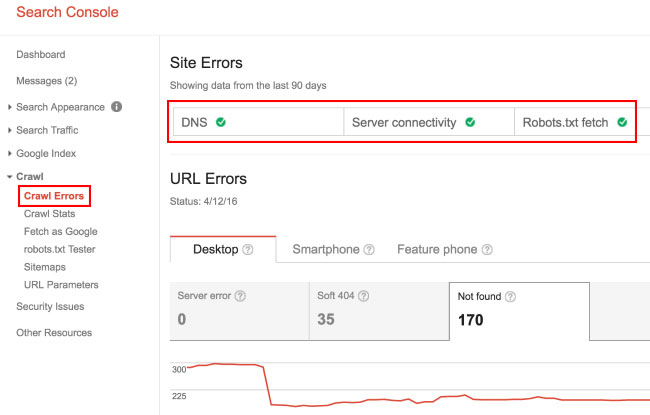

- Monitor “Crawl Errors”, “robots.txt Tester”, and “Fetch as Google” under the “Crawl” section in Search Console to find pages and resources that are blocked from crawling.

- Inspect for orphan pages. Orphan pages exist on your site but aren’t linked internally from any other page.
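Orphan pages can be found by comparing the pages you know exist with the pages that receive at least one internal link. This is a minimal sketch that assumes you already have both lists, for example from a sitemap and a crawler export; the function name and the sample data are hypothetical.

```python
def find_orphan_pages(
    all_pages: set[str],
    internal_links: dict[str, set[str]],
    entry_points: frozenset[str] = frozenset({"/"}),
) -> set[str]:
    """Return pages that no other page links to, ignoring entry points such as the home page."""
    linked = set()
    for source, targets in internal_links.items():
        # A page that only links to itself is still unreachable from elsewhere.
        linked |= {target for target in targets if target != source}
    return all_pages - linked - entry_points


# Hypothetical site: every known page, and the links found on each page.
PAGES = {"/", "/blog", "/blog/technical-seo", "/old-landing-page"}
LINKS = {
    "/": {"/blog"},
    "/blog": {"/", "/blog/technical-seo"},
    "/old-landing-page": {"/old-landing-page"},
}

print(find_orphan_pages(PAGES, LINKS))
```

Here only `/old-landing-page` is reported, because nothing else on the site links to it.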

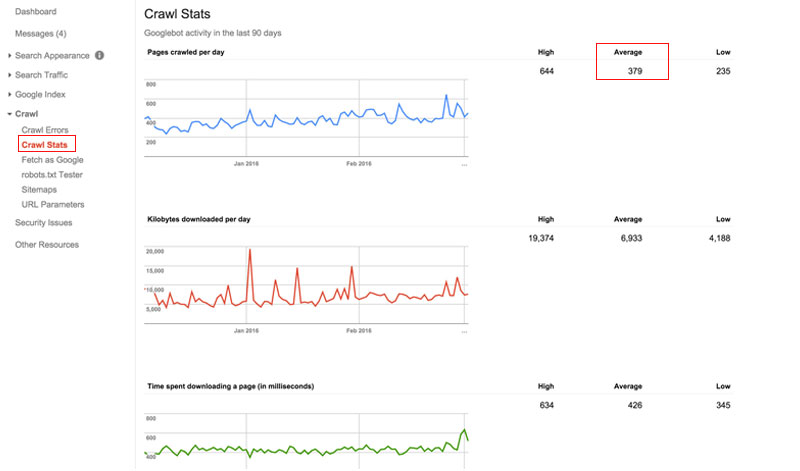

Crawl budget refers to the number of pages search engines crawl on your site during a given period of time. To check your crawl rate, open “Crawl Stats” under the “Crawl” section in Google Search Console.

An increase in crawl budget indicates that Google is interested in your site, which can ultimately grow organic traffic and improve your search ranking.

Things to consider when optimizing Google Crawl Budget

- Make sure that your site loads fast. This gives two advantages: first, a better user experience, and second, an increase in crawl rate, as slow-loading sites are crawled less.

- Clear duplicate content.

- Make sure important pages are crawlable and the least significant pages are disallowed from crawling.

- Avoid too many redirects

- Fix broken links

- Update the sitemap whenever changes are made

- Keep track of URL parameters. Dynamic URLs often point to the same page, but bots treat them as different pages, so crawl budget is wasted. You can tell bots how to handle these parameters by adding them in “URL Parameters” under “Crawl” in Search Console.

- Monitor the “Crawl Errors” report under “Crawl” in Search Console and fix any errors
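To get a feel for how URL parameters multiply the number of URLs bots see, you can normalize a list of crawled URLs and group the variants. The sketch below is only an illustration: the list of parameters to ignore is an assumption, and you should build your own from the parameters your site actually uses.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumed set of parameters that do not change the page content.
IGNORED_PARAMS = frozenset({"utm_source", "utm_medium", "utm_campaign", "sessionid"})


def normalize_url(url: str, ignored: frozenset[str] = IGNORED_PARAMS) -> str:
    """Drop ignored parameters and sort the rest so equivalent URLs compare equal."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in ignored
    ]
    kept.sort()
    return urlunsplit((parts.scheme, parts.netloc.lower(), parts.path, urlencode(kept), ""))


def group_variants(urls: list[str]) -> dict[str, list[str]]:
    """Map each normalized URL to its crawled variants, keeping only groups with more than one."""
    groups = defaultdict(list)
    for url in urls:
        groups[normalize_url(url)].append(url)
    return {key: variants for key, variants in groups.items() if len(variants) > 1}


# Hypothetical crawl export.
SAMPLE_URLS = [
    "https://www.example.com/shoes?color=red&utm_source=newsletter",
    "https://www.example.com/shoes?sessionid=abc123&color=red",
    "https://www.example.com/hats",
]

print(group_variants(SAMPLE_URLS))
```

Each group in the output is one page that bots may be crawling more than once under different URLs.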

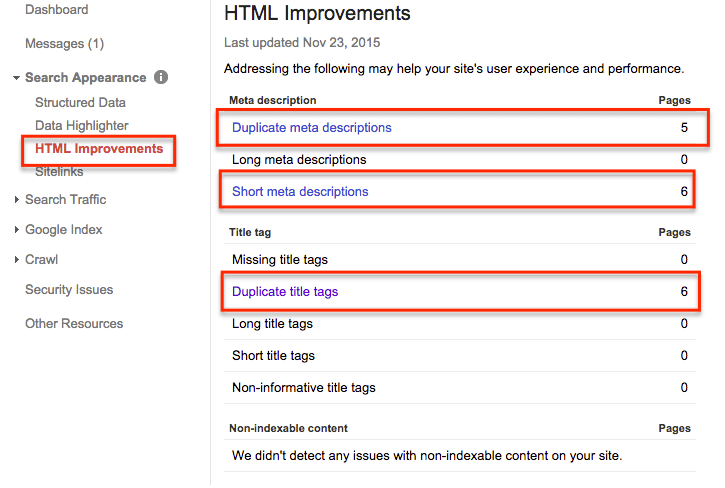

Duplicate content is one of the most serious problems in SEO, as it can lower the site’s ranking and drive users away.

The same content found on different URLs of the same site is considered duplicate content, for example:

www.example.com/blog/technical-seo

www.example.com/blog/tag/technical-seo

www.example.com/blog/tag/category/technical-seo

All of these pages will be crawled by spiders and flagged as duplicate content. In this case, you can add a rel="canonical" link element to each duplicate page to tell bots which page to index and show to the user.

Below is the implementation code:

<link rel="canonical" href="https://www.example.com/blog/technical-seo">
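When auditing, it helps to confirm that each duplicate page really declares the canonical URL you expect. The following sketch reads the canonical link out of a page's HTML with Python's standard `html.parser`; the class name, function name, and sample HTML are illustrative only.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Collect the href of every <link rel="canonical"> element in a document."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attributes = dict(attrs)
        rel_values = (attributes.get("rel") or "").lower().split()
        if "canonical" in rel_values and attributes.get("href"):
            self.canonicals.append(attributes["href"].strip())


def canonical_urls(html: str) -> list[str]:
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonicals


# Hypothetical HTML of the tag page from the example above.
SAMPLE_HTML = """
<html><head>
<link rel="canonical" href="https://www.example.com/blog/technical-seo">
</head><body>Tag archive</body></html>
"""

print(canonical_urls(SAMPLE_HTML))
```

A page should normally return exactly one canonical URL; an empty list or several different values is worth a closer look.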

Other issues include a site being available in both www and non-www versions, or paginated pages being indexed.

Here are some points to consider when dealing with duplicate content

- Delete or rewrite duplicate content

- Check the “HTML Improvements” report under “Search Appearance” in Search Console to find duplicate content issues

- Use canonical tags

- Set up proper 301 redirects

- Have an effective pagination strategy
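Before deleting or rewriting anything, you need to know which pages share the same content. One simple approach, sketched below, is to fingerprint the text of each page and group URLs with identical fingerprints. This only catches exact duplicates after whitespace and case are normalized, and it assumes you have already extracted the main text of each page; the sample data is made up.

```python
import hashlib
import re
from collections import defaultdict


def fingerprint(text: str) -> str:
    """Hash the text after collapsing whitespace and lowercasing it."""
    normalized = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def find_duplicates(pages: dict[str, str]) -> list[list[str]]:
    """Return groups of URLs whose text has the same fingerprint."""
    groups = defaultdict(list)
    for url, text in pages.items():
        groups[fingerprint(text)].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


# Hypothetical extracted page text keyed by URL.
SAMPLE_PAGES = {
    "www.example.com/blog/technical-seo": "Technical SEO deals with crawling.",
    "www.example.com/blog/tag/technical-seo": "Technical  SEO deals with\ncrawling.",
    "www.example.com/blog/link-building": "Link building is off-page SEO.",
}

print(find_duplicates(SAMPLE_PAGES))
```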

A good site structure will help you rank higher in search engines, while a bad architecture will lower your ranking.

A well-designed site architecture gives a great user experience, improves your sales, and helps bots crawl your website easily.

Here are some points to consider

- HTTPS

Google ranks sites higher when they provide a trustworthy, better user experience, and HTTPS is considered a ranking factor. It gives surfers a secure browsing experience. 50% of websites have migrated to HTTPS, and most of the websites ranked in the top positions of search results use HTTPS. While doing an audit, check whether the site has been migrated to HTTPS. If not, migrate it; if it already uses HTTPS, check for any issues arising.

- Breadcrumbs

Another important aspect. Breadcrumbs provide simple navigation for the user. If your site has many pages or is an e-commerce site, using breadcrumbs is a good option. If the user wishes to head back to the category of the page, clicking the breadcrumb link takes them directly to that page.

- URL Structure

A clear and concise URL tells both readers and robots what the page is about. Make sure your URL is descriptive and includes keywords that tell users about the page content before they click on it. Don’t include random numbers, letters, or anything else that doesn’t make sense.

- Internal Links

The last but major point. Internal links are another way bots crawl sites. They act as navigation for users, establish the information hierarchy of the website, and pass link juice (or PageRank) to other pages on the site more efficiently. Look for click depth, broken links, redirected links, and orphan pages while auditing internal links.
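Click depth is the number of clicks needed to reach a page from the home page, and it can be computed with a breadth-first search over the internal link graph. The sketch below assumes you already have that graph, for example from a crawler export; the function name and the sample links are hypothetical.

```python
from collections import deque


def click_depths(links: dict[str, list[str]], home: str = "/") -> dict[str, int]:
    """Return the minimum number of clicks from the home page to each reachable page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            # The first time a page is seen is also the shortest route to it.
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical internal link graph: page -> pages it links to.
SAMPLE_LINKS = {
    "/": ["/blog", "/shop"],
    "/blog": ["/blog/technical-seo"],
    "/blog/technical-seo": ["/"],
    "/shop": [],
}

for page, depth in click_depths(SAMPLE_LINKS).items():
    print(depth, page)
```

Pages with a large depth are hard for both users and bots to reach, and any known page missing from the result is not reachable from the home page at all.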

An XML sitemap helps Google crawl and index your website pages quickly.

You can create a sitemap using one of these plugins: Yoast SEO or Google XML Sitemaps.

After creating the sitemap, submit it in Google Search Console and also reference it in your robots.txt file.

Things to consider while making a sitemap

- Update your sitemap whenever you add a new page to your website. This will help crawlers identify new content faster.

- Don’t include URLs that you blocked in the robots.txt file, non-canonical pages, or redirect URLs; otherwise bots may ignore your sitemap.

- A single sitemap file can include at most 50,000 URLs, but you can create more than one sitemap for a site. For example, you can create separate sitemaps for videos, images, blogs, and so on.

To view any errors in the sitemap, visit Google Search Console > Crawl > Sitemaps.
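If you are not using a plugin, a sitemap is simple enough to generate yourself. The sketch below builds sitemap files with Python's standard `xml.etree.ElementTree` and starts a new file whenever the 50,000-URL limit is reached. The function name and the URLs are made up, and a real setup would also need a sitemap index file to list the individual files.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS_PER_SITEMAP = 50_000


def build_sitemaps(urls: list[str], limit: int = MAX_URLS_PER_SITEMAP) -> list[bytes]:
    """Return one XML document (as UTF-8 bytes) per chunk of at most `limit` URLs."""
    documents = []
    for start in range(0, len(urls), limit):
        urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
        for url in urls[start:start + limit]:
            entry = ET.SubElement(urlset, "url")
            ET.SubElement(entry, "loc").text = url
        documents.append(ET.tostring(urlset, encoding="utf-8", xml_declaration=True))
    return documents


# Hypothetical list of canonical, indexable URLs.
SAMPLE_URLS = [
    "https://www.example.com/",
    "https://www.example.com/blog/technical-seo",
]

for document in build_sitemaps(SAMPLE_URLS):
    print(document.decode("utf-8"))
```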

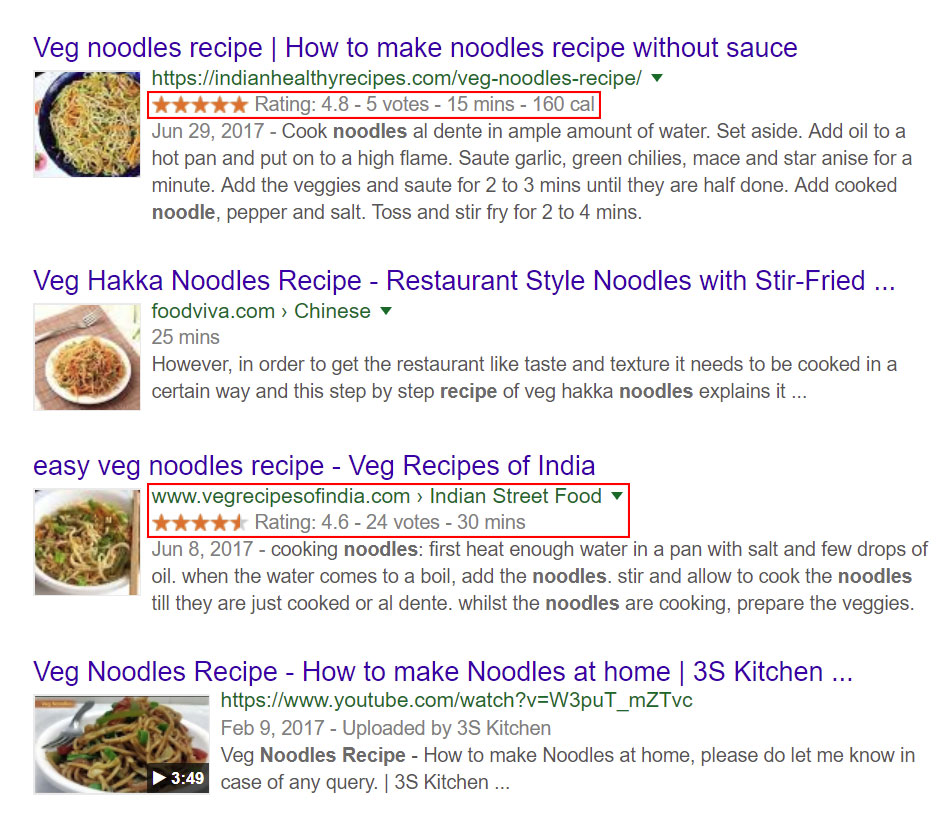

Another important thing to take into consideration is structured data, which powers rich snippets. Structured data helps web crawlers identify your content easily. Including structured data can also display additional information on the search engine results page, such as stars, total number of reviews, pricing, and so on.

This not only improves your ranking but also lets users learn more about your service and product, which helps in gaining their trust.

Ways to implement Schema

- Schema.org plugin

- Add schema tags relevant to your niche directly in your HTML code. You can find them at schema.org

- Google’s Markup helper

After implementing rich snippets, use the Structured Data Testing Tool to check whether they work.
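As an illustration of adding schema markup directly to your HTML, the sketch below generates a JSON-LD snippet for a product with a star rating, review count, and price. It uses the schema.org `Product`, `AggregateRating`, and `Offer` types; the function name and the product details are invented for the example, and you should still validate the output with a testing tool.

```python
import json


def product_json_ld(name: str, rating_value: float, review_count: int,
                    price: str, currency: str) -> str:
    """Return a <script> element containing schema.org Product markup as JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": rating_value,
            "reviewCount": review_count,
        },
        "offers": {
            "@type": "Offer",
            "price": price,
            "priceCurrency": currency,
        },
    }
    # Escape "</" so a value can never close the script element early.
    body = json.dumps(data, indent=2).replace("</", "<\\/")
    return '<script type="application/ld+json">\n' + body + "\n</script>"


# Hypothetical product.
print(product_json_ld("Example Widget", 4.5, 120, "19.99", "USD"))
```

The resulting script element goes into the HTML of the product page.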

In this fast-paced technological age, a slow-loading page will result in a high bounce rate and low conversions.

Page speed is one of Google’s top ranking factors. A site that loads slowly loses ranking on the search engine results page. Apart from seeing it as a ranking factor, think about user experience too: nobody has time to wait for a slow-loading site, so you’ll lose valuable customers.

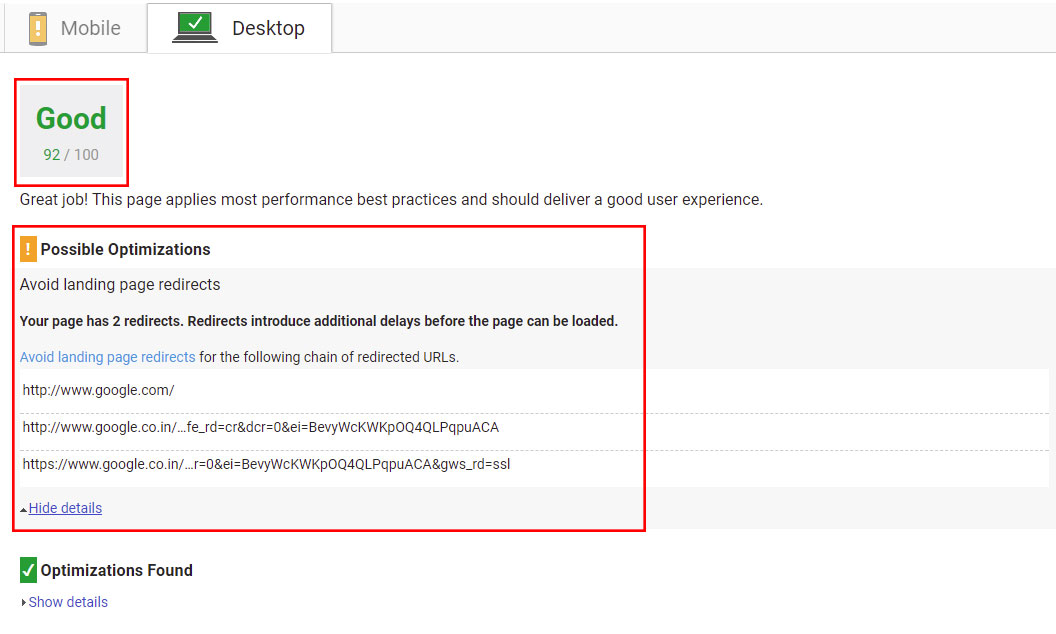

You can use either of the following tools to check your website speed: Google PageSpeed Insights or GTmetrix. They not only show page speed but also recommend useful tips to reduce your loading time.

Consider the following factors to boost your page speed

- Get better hosting

- Disable unnecessary plugins

- Optimize images, CSS, and JavaScript files

- Enable browser caching

- Limit redirects

- Use a Content Delivery Network (CDN)
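Of these factors, redirects are easy to audit with a small script, since every extra hop adds loading time. The sketch below follows redirect chains through a redirect map and stops on loops. It assumes you have exported the map (source URL to target URL) from your server configuration or a crawl; the function name and the sample rules are hypothetical.

```python
def redirect_chain(start: str, redirects: dict[str, str], max_hops: int = 10) -> list[str]:
    """Follow redirects from `start` and return every URL visited, in order."""
    chain = [start]
    while chain[-1] in redirects and len(chain) <= max_hops:
        target = redirects[chain[-1]]
        looped = target in chain
        chain.append(target)
        if looped:
            break  # The chain has returned to a URL it already visited.
    return chain


# Hypothetical redirect rules: source URL -> target URL.
SAMPLE_REDIRECTS = {
    "http://example.com/": "https://example.com/",
    "https://example.com/": "https://www.example.com/",
}

chain = redirect_chain("http://example.com/", SAMPLE_REDIRECTS)
print(len(chain) - 1, "hops:", " -> ".join(chain))
```

In this sample the visitor is redirected twice before reaching the final page; pointing the first rule straight at the final URL would remove one hop.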

Google is switching to a “mobile-first index”, meaning it indexes a website based on its mobile version instead of its desktop version. Based on this, it determines the position at which your site ranks in both desktop and mobile search engine result pages.

While auditing, you need to consider not only how desktop bots access your site but also how the mobile crawler sees it.

Here’s a list of things to consider when auditing

- Test your pages’ mobile-friendliness either with Google’s Mobile-Friendly Test tool or with “Fetch as Google” in Search Console.

- Check whether the website is easily crawled by Google bots using the robots.txt testing tool

- Look at the Mobile Usability report in Search Console to check for any issues

- Keep track of mobile rankings.

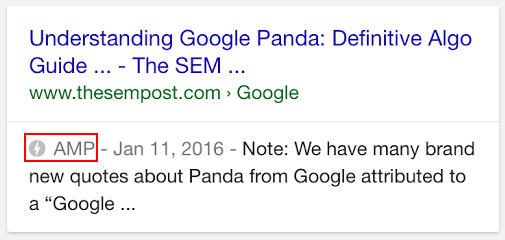

Google rolled out AMP (Accelerated Mobile Pages) to give users a better experience. AMP pages load faster than non-AMP pages because they are built with AMP HTML, so you need to create AMP HTML versions of your pages. This makes it important to implement AMP and check your pages against the AMP guidelines.

Things to check after AMP implementation

- You can see how your AMP page looks by adding “?amp=1” at the end of your page URL, for example www.example.com/?amp=1 (the exact URL pattern depends on your AMP setup)

- Check the AMP report in Google Search Console to see whether AMP pages are indexed and whether any errors have been encountered, or test your pages with Google’s AMP Test tool

I hope you enjoyed reading this post and found some useful information. Let me know your suggestions and views in the comment section below.

Poorti is an SEO Executive at Effectual Media. She helps businesses generate leads organically by bringing their presence to the front pages of search engines. Her expertise is in uncovering new opportunities in content marketing and search engine marketing. She is an avid reader of marketing trends.